Have you ever wondered how your smartphone or smart speaker understands and responds to you? Or how it is possible to have a real-time conversation with someone speaking a different language and understand them almost instantly? Behind it is an exciting and rapidly developing technology: voice chat based on artificial intelligence (AI). This tutorial will take you step by step through how this technology works, from AI voice agents to practical uses.

What are AI Voice Agents and how do they work?

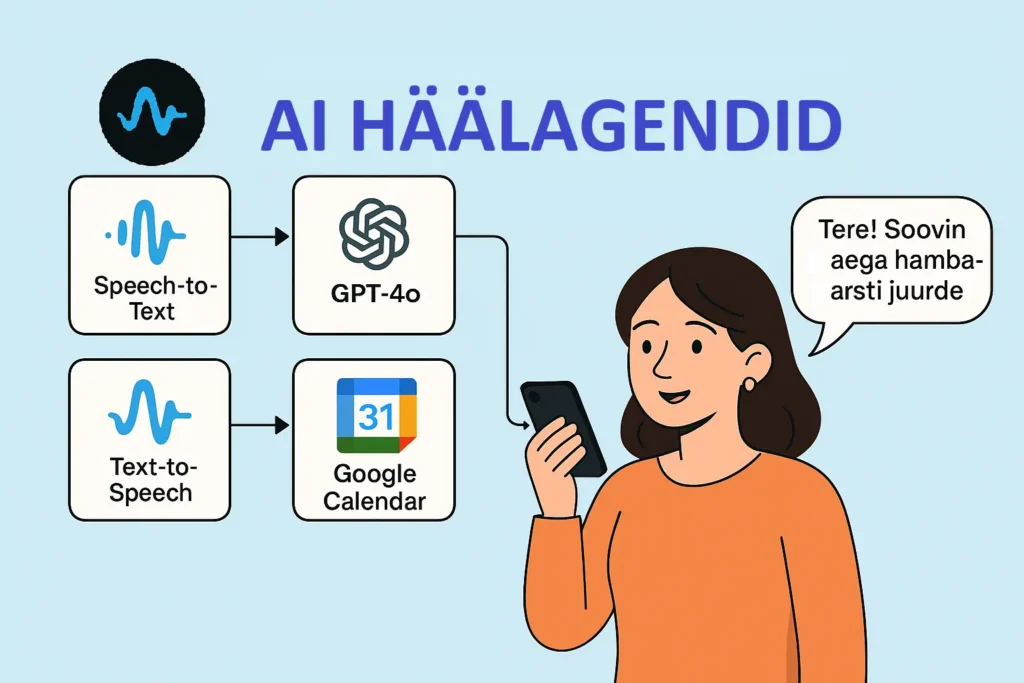

AI voice agents, also known as virtual assistants or chatbots, are software programs designed to understand and respond to human speech. Imagine having an invisible assistant who listens to your commands, answers your questions, and even performs simple tasks—all using your voice.

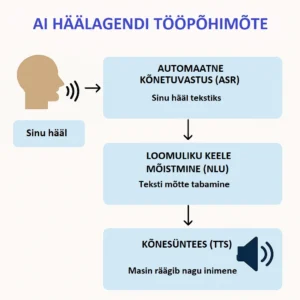

The working principle of these agents is built on three main technological pillars: automatic speech recognition (ASR), natural language understanding (NLU), and speech synthesis, also called text-to-speech (TTS). Let's take a closer look at these components.

Three Pillars: ASR, NLU and TTS

Automatic Speech Recognition (ASR): Your Voice to Text

The first step in AI voice chat is to convert the words you speak into text that the computer can process. This is done by automatic speech recognition, or ASR. Think of it as a digital stenographer that listens to you and writes everything down.

How does it work? ASR systems are trained on huge amounts of data, including different voices, accents, and speech patterns. They analyze the sound waves of your voice, identify phonemes (the smallest units of sound in speech), and match them against the patterns learned during training to work out the most likely words. As a result, your speech is converted into text.

Example: When you ask your smartphone: “What is the weather like today?”, the ASR’s job is to convert that sound wave into the text: “What is the weather like today?”.
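Today's ASR systems rely on neural networks, but the older idea of comparing speech against stored patterns can be illustrated with dynamic time warping (DTW), a technique early isolated-word recognizers used to match an utterance against recorded templates even when it is spoken faster or slower. This is a toy sketch: the "recordings" are short lists of made-up one-dimensional feature values rather than real acoustic features, and the two template words are hypothetical.

```python
def dtw_distance(a: list[float], b: list[float]) -> float:
    """Dynamic time warping distance between two 1-D feature sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = cheapest cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])  # local distance between two frames
            # Extend the cheapest of: diagonal match, stretch a, stretch b.
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    return cost[n][m]


def recognize(features: list[float], templates: dict[str, list[float]]) -> str:
    """Return the template word whose pattern is closest to the input."""
    return min(templates, key=lambda word: dtw_distance(features, templates[word]))


# Hypothetical templates: toy feature sequences, not real acoustics.
templates = {
    "yes": [1.0, 3.0, 4.0, 3.0, 1.0],
    "no": [4.0, 4.0, 1.0, 1.0, 0.0],
}

# The same "yes" pattern spoken more slowly (some frames repeated).
slow_yes = [1.0, 1.0, 3.0, 3.0, 4.0, 4.0, 3.0, 1.0]
print(recognize(slow_yes, templates))  # yes
```

Because DTW stretches and compresses the time axis, the slowed-down input still aligns with the "yes" template at zero cost.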

Natural Language Understanding (NLU): Capturing the Meaning of Text

Now that we have the text, the AI needs to understand what you actually meant to say. This is where natural language understanding, or NLU, comes in. This is the part of the AI’s brain that interprets the meaning, intent, and context of the text.

How does it work? NLU uses sophisticated algorithms and machine learning models to analyze sentence structure, identify key words, and understand their relationships. It can distinguish between questions, commands, and statements, and even detect your mood (for example, whether you are happy or angry).

Example: Once ASR has converted your question into the text “What is the weather like today?”, NLU recognizes that you want to know the current weather forecast for your location.
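Production NLU uses trained models, but a rule-based toy makes its two core outputs concrete: an intent (what the user wants) and slots, or entities (the details needed to act on it). The intent names, keyword lists, and slot name below are hypothetical, and keyword matching is a deliberate simplification.

```python
import re

# Hypothetical intents and the keywords that signal them.
INTENT_KEYWORDS = {
    "get_weather": ["weather", "rain", "sunny", "temperature"],
    "set_alarm": ["alarm", "wake me"],
}

# Simple pattern for a "day" slot such as "today" or "tomorrow".
DAY_PATTERN = re.compile(r"\b(today|tomorrow|tonight)\b", re.IGNORECASE)


def understand(text: str) -> dict:
    """Map an utterance to an intent plus any slots found in it."""
    lowered = text.lower()
    intent = "unknown"
    for name, keywords in INTENT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            intent = name
            break
    slots = {}
    day = DAY_PATTERN.search(text)
    if day:
        slots["day"] = day.group(1).lower()
    # Crude sentence-type check: a trailing "?" marks a question.
    kind = "question" if text.strip().endswith("?") else "command_or_statement"
    return {"intent": intent, "slots": slots, "kind": kind}


print(understand("What is the weather like today?"))
# {'intent': 'get_weather', 'slots': {'day': 'today'}, 'kind': 'question'}
```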

Text-to-speech (TTS): Machine Speaks Like a Human

The final step is to give the AI a voice so it can respond to you. This is done by text-to-speech (TTS). This technology turns a text response into natural, understandable human speech.

How does it work? Modern TTS systems use deep learning and neural networks to create voices that are increasingly similar to real human voices. They can mimic different tones, intonations, and even emotions, making communication with AI more natural.

Example: After NLU understands your weather query, the AI looks for an answer (for example, “Today is sunny and 20 degrees”) and TTS converts this text into an audible response.
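Many TTS services, including Microsoft Azure, accept SSML (Speech Synthesis Markup Language, a W3C standard) so that pacing and pitch can be adjusted per sentence. The sketch below only builds the markup with Python's standard library; the voice name `et-EE-AnuNeural` is assumed to be Azure's Estonian neural voice, the prosody values are arbitrary examples, and sending the markup to a TTS service is left out.

```python
import xml.etree.ElementTree as ET

SSML_NS = "http://www.w3.org/2001/10/synthesis"


def build_ssml(text: str, voice: str, lang: str,
               rate: str = "medium", pitch: str = "medium") -> str:
    """Wrap text in SSML <speak>, <voice>, and <prosody> elements."""
    speak = ET.Element("speak", {"version": "1.0", "xmlns": SSML_NS, "xml:lang": lang})
    voice_el = ET.SubElement(speak, "voice", {"name": voice})
    prosody = ET.SubElement(voice_el, "prosody", {"rate": rate, "pitch": pitch})
    prosody.text = text  # ElementTree escapes special characters such as & and <
    return ET.tostring(speak, encoding="unicode")


# Estonian: "Hello! How can I help?", spoken about 10% slower than normal.
ssml = build_ssml("Tere! Kuidas saan aidata?", voice="et-EE-AnuNeural",
                  lang="et-EE", rate="-10%")
print(ssml)
```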

AI Voice Agents' Use Cases and Increased Efficiency in Businesses

Artificial intelligence (AI)-powered voice agents are quickly becoming an indispensable tool for businesses, offering significant efficiency gains and new opportunities in customer interactions and internal processes. Their ability to handle high call volumes, provide 24/7 service, and analyze data makes them a valuable investment in almost every sector.

1. Customer Support and Service:

AI voice agents excel at first-line customer support. They can answer repetitive questions, route calls to the right departments, resolve simple issues, and provide general information. This frees up staff to handle more complex and specific queries, which both improves the customer experience and reduces wait times. Voice agents can also provide 24/7 support, which is especially important for companies that operate globally.
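Routing calls to the right department can start as a simple lookup from the recognized intent to a destination, with a fallback to a person whenever the agent is unsure. The intents, department names, and the 0.6 confidence threshold in this sketch are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical mapping from recognized intent to the department that owns it.
ROUTES = {
    "billing_question": "billing",
    "technical_issue": "technical_support",
    "opening_hours": "self_service",  # answered by the agent itself
}

CONFIDENCE_THRESHOLD = 0.6  # below this, hand the call to a human


@dataclass
class Understanding:
    intent: str
    confidence: float


def route_call(result: Understanding) -> str:
    """Pick a destination for the call, escalating when unsure."""
    if result.confidence < CONFIDENCE_THRESHOLD:
        return "human_agent"
    return ROUTES.get(result.intent, "human_agent")


print(route_call(Understanding("billing_question", 0.92)))  # billing
print(route_call(Understanding("billing_question", 0.40)))  # human_agent
print(route_call(Understanding("cancel_contract", 0.95)))   # human_agent
```

Escalating both low-confidence and unknown requests keeps the agent on the simple, repetitive cases described above and leaves the rest to staff.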

2. Booking systems:

Restaurants, hotels, beauty salons, and medical clinics can automate the process of accepting and managing reservations with AI voice agents. Agents can check availability, offer options, confirm reservations, and send reminders. This significantly reduces administrative burden and error rates.
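Checking availability and offering options comes down to interval arithmetic: a slot is free if it does not overlap any existing booking, and alternatives can be found by stepping through the day. This sketch assumes bookings are held in memory as (start, end) pairs and uses a hypothetical 30-minute step; a real system would read bookings from its calendar.

```python
from datetime import datetime, timedelta


def overlaps(start: datetime, end: datetime,
             bookings: list[tuple[datetime, datetime]]) -> bool:
    """True if the interval [start, end) intersects any existing booking."""
    return any(start < b_end and b_start < end for b_start, b_end in bookings)


def free_slots(day_start: datetime, day_end: datetime, duration: timedelta,
               bookings: list[tuple[datetime, datetime]],
               step: timedelta = timedelta(minutes=30)) -> list[datetime]:
    """List start times where a slot of `duration` fits without overlap."""
    slots = []
    start = day_start
    while start + duration <= day_end:
        if not overlaps(start, start + duration, bookings):
            slots.append(start)
        start += step
    return slots


day = datetime(2025, 3, 3)
bookings = [(day.replace(hour=9), day.replace(hour=10)),
            (day.replace(hour=11), day.replace(hour=12, minute=30))]
options = free_slots(day.replace(hour=9), day.replace(hour=13),
                     timedelta(hours=1), bookings)
print([t.strftime("%H:%M") for t in options])  # ['10:00']
```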

3. Outbound Sales Calls and Offers (Telemarketing):

Although outbound sales calls have traditionally been made by people, AI voice agents are increasingly being used for them. They can make initial qualifying calls, pitch simpler products or services, conduct surveys, and collect data. This allows sales teams to focus on potential customers who have already shown interest, increasing conversion rates. However, it is important to ensure that the AI agent’s calls are not intrusive and that the agent makes clear it is an automated system.

4. Information and Feedback Collection:

AI voice agents are effective tools for market research, customer satisfaction surveys, and feedback collection. They can ask questions, record responses, and even analyze tone of voice to identify customer emotions. The collected data can then be used to develop products and services and to improve the customer experience.
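Once survey answers are recorded, turning them into a metric is simple arithmetic. One common definition of a customer satisfaction (CSAT) score is the share of respondents who answer 4 or 5 on a 1-to-5 scale; the ratings below are made-up sample data.

```python
def csat_score(ratings: list[int]) -> float:
    """Percentage of ratings that are 4 or 5 on a 1-5 scale."""
    if not ratings:
        raise ValueError("no ratings collected")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be between 1 and 5")
    satisfied = sum(1 for r in ratings if r >= 4)
    return 100.0 * satisfied / len(ratings)


# Made-up answers to "How satisfied were you with the call, from 1 to 5?"
answers = [5, 4, 3, 5, 2, 4, 5, 1]
print(f"CSAT: {csat_score(answers):.1f}%")  # CSAT: 62.5%
```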

5. Internal Communication and Workflow Automation:

In addition to external uses, AI voice agents can also assist with internal communications. They can answer recurring employee questions that would otherwise go to the HR department, provide information about company policies, or route internal calls. This helps reduce administrative burden and improve internal efficiency.
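For recurring HR questions, even a small lookup table helps once the caller's speech has been transcribed. This sketch swaps in fuzzy string matching from Python's standard `difflib` module rather than a language model; the questions, placeholder answers, and the 0.5 similarity cutoff are hypothetical.

```python
import difflib

# Hypothetical HR FAQ: canonical question -> placeholder answer.
FAQ = {
    "how do i request vacation": "Submit a leave request in the HR portal.",
    "when is salary paid": "Salary is paid on the date stated in your contract.",
    "how do i update my address": "Update your address in the HR portal under Profile.",
}


def answer(question: str, cutoff: float = 0.5) -> str:
    """Return the answer to the closest known question, or escalate."""
    normalized = question.lower().strip(" ?")
    matches = difflib.get_close_matches(normalized, FAQ.keys(), n=1, cutoff=cutoff)
    if not matches:
        return "Let me connect you with the HR department."
    return FAQ[matches[0]]


print(answer("How do I request a vacation?"))
# Submit a leave request in the HR portal.
```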

Efficiency Gains and Cost:

The main advantages of AI voice agents are scalability and 24/7 availability. They can handle significantly higher call volumes than human workers and remain available regardless of holidays or business hours. This leads to significant cost savings: a smaller customer support team is needed, and there is no need to pay extra for evening or weekend work.

Costs vary depending on the complexity of the services offered, call volume, integration needs, and the chosen platform. Typically, service providers offer different packages, ranging from a few tens of euros per month for smaller companies to thousands of euros for large companies that need customized solutions and high call volumes. Pricing is often based on the number of call minutes or the number of transactions. On top of the monthly fee, there may be one-time setup fees and costs for specific integrations. In the long term, AI voice agents can deliver a significant return on investment through labor cost savings and improved service quality.
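The pricing model above (a monthly fee, billed minutes, and a one-time setup fee) can be turned into a quick estimate. Every figure in this sketch, including the rate, base fee, setup fee, and call volume, is a hypothetical placeholder to be replaced with a provider's actual price list; amounts are in whatever currency that price list uses.

```python
def monthly_cost(minutes: float, rate_per_minute: float, base_fee: float = 0.0) -> float:
    """Usage-based cost for one month: base fee plus billed minutes."""
    return base_fee + minutes * rate_per_minute


def first_year_cost(minutes_per_month: float, rate_per_minute: float,
                    base_fee: float = 0.0, setup_fee: float = 0.0) -> float:
    """Twelve months of usage plus a one-time setup fee."""
    return setup_fee + 12 * monthly_cost(minutes_per_month, rate_per_minute, base_fee)


# Hypothetical small business: 100 calls a month, 3 minutes each.
minutes = 100 * 3
print(round(monthly_cost(minutes, rate_per_minute=0.10, base_fee=20.0), 2))  # 50.0
print(round(first_year_cost(minutes, 0.10, base_fee=20.0, setup_fee=200.0), 2))  # 800.0
```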

How can you build your own AI voice agent?

One option is Vapi.ai, a platform designed for developers and enterprises to create and manage real-time, human-like AI voice agents. Its primary goal is to enable smooth and natural conversations with AI that are realistic enough to be difficult to distinguish from human-to-human conversations. Vapi.ai addresses two common problems of AI speech systems, high latency and unnatural conversational flow, by providing fast responses and smooth dialogue.

How does Vapi.ai work?

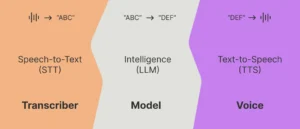

Vapi.ai acts as an integration platform that connects and optimizes the collaboration of three main modules: the transcription module (listener), the model (brain), and the voice module (speaker). The system's efficiency depends on low latency and smooth data-flow management at each stage.

The process is as follows:

Listening (receiving raw audio – Speech-to-Text or STT):

- When a person speaks, a client device (such as a laptop or phone) records the raw audio signal.

- This raw audio is then passed to a transcription module. Transcription can take place either on the client device itself or on a dedicated server.

- The transcription module converts speech input into written text (STT or Speech-to-Text).
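Streaming transcription usually receives audio in small chunks rather than as one file. Assuming 16 kHz, 16-bit mono PCM (a common format for speech, but an assumption here), a 20 ms frame holds 16,000 × 0.02 = 320 samples, or 640 bytes. The sketch splits a raw byte buffer into such frames, as a client might before streaming them to an STT service.

```python
from collections.abc import Iterator

SAMPLE_RATE = 16_000   # samples per second (assumed)
BYTES_PER_SAMPLE = 2   # 16-bit PCM (assumed)
FRAME_MS = 20          # frame length in milliseconds (assumed)

FRAME_BYTES = SAMPLE_RATE * FRAME_MS // 1000 * BYTES_PER_SAMPLE  # 640


def frames(pcm: bytes, frame_bytes: int = FRAME_BYTES) -> Iterator[bytes]:
    """Yield fixed-size frames; the final partial frame is zero-padded."""
    for offset in range(0, len(pcm), frame_bytes):
        chunk = pcm[offset:offset + frame_bytes]
        if len(chunk) < frame_bytes:
            chunk += b"\x00" * (frame_bytes - len(chunk))  # pad with silence
        yield chunk


one_second = bytes(SAMPLE_RATE * BYTES_PER_SAMPLE)  # 1 s of silence
print(FRAME_BYTES, sum(1 for _ in frames(one_second)))  # 640 50
```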

Model (Brain) Processing (Intelligence or LLM):

- The resulting transcribed text is then fed into a large language model (LLM).

- The LLM is the “brain” of the Vapi.ai system: it processes the received text, understands the context of the conversation, and generates an appropriate response in text form. It is the intelligent part of the AI agent that simulates human thinking and decision-making.
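An LLM can only use the conversation context that is sent along with each request. A widely used way to represent that context is the role/content message list of chat-completion APIs such as OpenAI's; the sketch below keeps the system prompt and trims older turns to a fixed budget. The class name and the six-message limit are hypothetical, and no API is called.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    system_prompt: str
    max_turns: int = 6  # keep at most this many user/assistant messages
    turns: list[dict] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Record a user or assistant message."""
        if role not in ("user", "assistant"):
            raise ValueError("role must be 'user' or 'assistant'")
        self.turns.append({"role": role, "content": content})
        # Drop the oldest messages once the history grows too long.
        self.turns = self.turns[-self.max_turns:]

    def messages(self) -> list[dict]:
        """Messages to send with the next model request."""
        return [{"role": "system", "content": self.system_prompt}] + self.turns


chat = Conversation("You are a friendly booking assistant.")
chat.add("user", "I'd like to book a cleaning.")
chat.add("assistant", "Sure, which day suits you?")
print(len(chat.messages()))  # 3
```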

Speaking (text → raw audio – Text-to-Speech or TTS):

- The text response generated by the LLM is transmitted to the voice module.

- The voice module converts this text back into raw audio that can be played on the user's device. This process can also take place either on the user's device or on the server.

- Vapi.ai uses advanced Text-to-Speech (TTS) technologies to make the generated speech as natural and human as possible, taking into account tone, intonation, and rhythm.
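"Raw audio" here means a stream of PCM samples. To be stored or played as a file, those samples need a header describing their format, which Python's standard `wave` module can add. The sketch generates a short tone as a stand-in for real TTS output (no speech is synthesized) and wraps it as WAV in memory; the 16 kHz, 16-bit mono format is an assumption.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 16_000  # assumed output format: 16 kHz, 16-bit, mono


def tone_pcm(freq_hz: float, seconds: float, amplitude: float = 0.3) -> bytes:
    """Stand-in for TTS output: a sine tone as little-endian 16-bit PCM."""
    n = int(SAMPLE_RATE * seconds)
    samples = (int(amplitude * 32767 * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE))
               for i in range(n))
    return b"".join(struct.pack("<h", s) for s in samples)


def pcm_to_wav(pcm: bytes) -> bytes:
    """Add a WAV header so the raw samples can be played back."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # bytes per sample
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(pcm)
    return buffer.getvalue()


wav_bytes = pcm_to_wav(tone_pcm(440, 0.5))
print(wav_bytes[:4], len(wav_bytes))  # b'RIFF' 16044
```

The 0.5-second tone is 8,000 samples (16,000 bytes); the extra 44 bytes are the WAV header.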

This entire process – speech to text, text processing, and text back to speech – happens in real-time and with extremely low latency (ideally less than 500-700 ms). Vapi.ai optimizes this flow, manages scaling and data streaming, and coordinates the conversation to ensure a smooth and responsive communication experience. It is important to note that Vapi.ai allows users to swap these three modules with different providers (e.g. OpenAI, Groq, Deepgram, ElevenLabs, PlayHT), providing flexibility and the ability to customize the system to specific needs and preferences.
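The listen, think, speak loop and the idea of swappable providers can be sketched with three small interfaces. This is not Vapi.ai's API: the `Transcriber`, `Brain`, and `Voice` protocols and the stub providers are hypothetical, meant only to show how each stage hands its output to the next and how per-stage latency adds up toward a budget such as 700 ms.

```python
import time
from typing import Protocol


class Transcriber(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class Brain(Protocol):
    def respond(self, text: str) -> str: ...


class Voice(Protocol):
    def synthesize(self, text: str) -> bytes: ...


# Stub providers; a real setup would call an STT, LLM, and TTS service here.
class FakeTranscriber:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretend the audio is already text


class FakeBrain:
    def respond(self, text: str) -> str:
        return f"You said: {text}"


class FakeVoice:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # pretend the text is audio


def handle_turn(audio: bytes, stt: Transcriber, llm: Brain,
                tts: Voice) -> tuple[bytes, dict[str, float]]:
    """Run one conversational turn and time each stage in milliseconds."""
    timings: dict[str, float] = {}

    start = time.perf_counter()
    text = stt.transcribe(audio)
    timings["stt"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply = llm.respond(text)
    timings["llm"] = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    reply_audio = tts.synthesize(reply)
    timings["tts"] = (time.perf_counter() - start) * 1000

    timings["total"] = timings["stt"] + timings["llm"] + timings["tts"]
    return reply_audio, timings


audio_out, timings = handle_turn(b"hello", FakeTranscriber(), FakeBrain(), FakeVoice())
print(audio_out)                # b'You said: hello'
print(timings["total"] <= 700)  # True
```

Swapping a provider means passing a different object with the same method. Real pipelines also stream partial results between stages so they overlap in time; this sequential version only shows the hand-offs.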

What are the main features of Vapi.ai?

Vapi.ai offers several key features that make it a powerful tool for building voice AI solutions:

- Real-time, human-like conversation: The main advantage of the system is its ability to provide smooth and fast conversations that are extremely close to human speech. This reduces unnatural pauses and significantly improves the user experience.

- Modular architecture: The ability to switch between STT, LLM, and TTS service providers gives developers tremendous flexibility. This means you can choose the best and most cost-effective services for each component based on your needs.

- Low latency: Vapi.ai is designed to operate with minimal latency, which is critical for smooth and natural conversations. The system is optimized so that the entire cycle, from the caller's speech to the agent's spoken reply, takes less than a second.

- Orchestration and Scaling: The platform manages a complex process that involves synchronizing multiple modules, streaming data, and scaling the system to support large call volumes.

- Tool Integration (Tool Calling): AI agents can use external tools and APIs during a conversation to retrieve data or perform actions (e.g., make reservations, search for information).

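- Multilingualism: Because the speech recognition and speech synthesis providers can be swapped, agents can work in multiple languages (the project described below runs in Estonian), allowing for the creation of global voice AI solutions.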

- Developer-friendly API: Vapi.ai offers a clear and easy-to-use API that allows developers to quickly integrate speech AI functionality into their applications.
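Tool calling generally works like this: the model does not run code itself; it returns the name of a tool plus arguments, the platform runs the matching function, and the result is passed back to the model. The sketch shows only that dispatch step, with a hypothetical `check_availability` tool and a simplified call shape; it is not the exact message format of Vapi.ai or of any particular model provider.

```python
import json
from typing import Callable

# Hypothetical booked slots, keyed by ISO start time.
BOOKED = {"2025-03-03T10:00"}


def check_availability(start: str) -> dict:
    """Hypothetical tool: report whether a slot is free."""
    return {"start": start, "available": start not in BOOKED}


TOOLS: dict[str, Callable[..., dict]] = {"check_availability": check_availability}


def run_tool_call(call: dict) -> str:
    """Execute a model-requested tool call and return a JSON result."""
    name = call["name"]
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool {name}"})
    arguments = json.loads(call["arguments"])  # in this shape, arguments arrive as a JSON string
    return json.dumps(TOOLS[name](**arguments))


# What a model's tool call might look like (simplified, hypothetical shape).
call = {"name": "check_availability", "arguments": '{"start": "2025-03-03T10:00"}'}
print(run_tool_call(call))  # {"start": "2025-03-03T10:00", "available": false}
```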

Vapi.ai is well suited for businesses looking to automate customer service, sales, reservations, or other voice-based interactions while providing a human-like and seamless experience for customers.

Practical example: AI dentist booking system

To understand the potential of Vapi.ai, I decided to create something practical: an AI agent that helps a dental clinic book appointments for patients. The goal was simple: a person calls the clinic and the AI assistant guides them through the booking process, checks available times directly from Google Calendar, and confirms the booking.

How did it work technically?

The system I built used the following components via the Vapi.ai platform:

- Brain (Language Model): OpenAI and its powerful `GPT-4o` model. This is the part of the AI that thinks, makes decisions, and generates responses according to instructions.

- Voice (Text-to-Speech): The Microsoft Azure platform and the Estonian `Anu` voice (`et-EE`). This gave the AI a pleasant and understandable Estonian voice.

- Ears (Speech Recognition): Also Azure, with its `et-EE` transcription service, which converted the caller's speech into text that the AI could process.

- Memory and Tools (Integrations): The Vapi.ai built-in `Google Calendar` integration, which allowed the AI to check calendar availability and create new entries. In addition, an `appGetDateTime` tool, so that the AI always knows the current date.
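Language models have no built-in clock, which is why a date tool is needed at all. The source does not say how `appGetDateTime` is implemented; a minimal sketch of what such a tool might return, assuming the clinic runs on Estonian time (`Europe/Tallinn`), could look like this. The function name and output fields are hypothetical.

```python
from datetime import datetime
from typing import Optional
from zoneinfo import ZoneInfo

CLINIC_TZ = "Europe/Tallinn"  # assumed clinic time zone


def get_date_time(now: Optional[datetime] = None) -> dict:
    """Hypothetical date/time tool: tell the model today's date and weekday."""
    if now is None:
        now = datetime.now(ZoneInfo(CLINIC_TZ))
    return {
        "date": now.date().isoformat(),
        "weekday": now.strftime("%A"),
        "time": now.strftime("%H:%M"),
    }


# Passing a fixed time makes the output predictable.
print(get_date_time(datetime(2025, 3, 3, 14, 5)))
# {'date': '2025-03-03', 'weekday': 'Monday', 'time': '14:05'}
```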

AI Guidance: “Prompt” or Collection of Commands

The most important part, however, was the instruction or “prompt” given to the AI. It is like a script or set of rules that the AI must follow. The Dental Clinic Booker’s Guide I created looked like this (in abbreviated form):

- Role: The AI had to know that it was the booking assistant at the dental clinic and that it had to speak Estonian.

- Process: The guide described step by step what the AI needed to do:

  - Identify the current date.

  - Ask about the desired service (teeth cleaning or dental treatment) and its duration.

  - Ask for a preferred day and time, ensuring it is in the future.

  - Check whether the time fits within the clinic's opening hours (Monday to Friday, 09:00-22:00), taking into account the duration of the service so that the appointment does not run past closing time.

  - Use the `Google Calendar Check Availability` tool to check whether the time is available.

  - If the time is not available, offer alternatives or ask for a new time.

  - If the time is available, ask for the caller's name and phone number, confirm the details, and book the time with the `Google Calendar Create Event` tool.

- Tone: The guideline also stipulated that the AI must be friendly, concise, free-flowing, and focused solely on booking.
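The guide expresses these booking rules in natural language for the model to follow. Writing the same checks as plain code shows how precise the rules have to be; this is a hypothetical illustration, not part of the author's Vapi.ai setup. It assumes Monday to Friday, 09:00-22:00 opening hours, made-up service durations, and existing bookings held as (start, end) pairs.

```python
from datetime import datetime, time, timedelta

OPEN, CLOSE = time(9, 0), time(22, 0)  # Monday to Friday, 09:00-22:00
SERVICE_DURATION = {                    # hypothetical durations
    "teeth cleaning": timedelta(minutes=45),
    "dental treatment": timedelta(minutes=60),
}


def check_request(service: str, start: datetime, now: datetime,
                  bookings: list[tuple[datetime, datetime]]) -> str:
    """Return 'ok' or the reason the requested time cannot be booked."""
    if service not in SERVICE_DURATION:
        return "unknown service"
    end = start + SERVICE_DURATION[service]
    if start <= now:
        return "time is in the past"
    if start.weekday() >= 5:  # Saturday = 5, Sunday = 6
        return "clinic is closed on weekends"
    # The whole appointment, not just its start, must fit within opening hours.
    if start.time() < OPEN or end.time() > CLOSE or end.date() != start.date():
        return "outside opening hours"
    if any(start < b_end and b_start < end for b_start, b_end in bookings):
        return "time is already booked"
    return "ok"


now = datetime(2025, 3, 3, 8, 0)  # a Monday morning
busy = [(datetime(2025, 3, 3, 10, 0), datetime(2025, 3, 3, 11, 0))]
print(check_request("teeth cleaning", datetime(2025, 3, 3, 21, 30), now, busy))  # outside opening hours
print(check_request("teeth cleaning", datetime(2025, 3, 3, 10, 30), now, busy))  # time is already booked
print(check_request("teeth cleaning", datetime(2025, 3, 4, 10, 30), now, busy))  # ok
```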

This shows how closely an AI's behavior can be directed. It is not just a “talking machine”, but a system that follows precise business rules and procedures.

Why Are Such AI Voice Agents Useful?

The benefits of systems like the booking agent built with Vapi.ai are multifaceted:

- 24/7 Availability: The AI assistant doesn't sleep or need breaks. Customers can make reservations at any time, even outside of normal business hours.

- Efficiency and Cost Savings: AI can handle a large number of calls simultaneously and take over routine tasks, freeing up human workers' time for more complex and valuable work. A call costs roughly $0.10 per minute, or about $6 per hour.

- Less Waiting Time: Customers no longer have to wait in line. AI responds immediately and starts the process.

- Fewer Human Errors: When AI is set up properly, it will always follow rules and procedures, reducing booking errors.

- Better Customer Experience: Fast, easy, and always available service significantly improves customer satisfaction.

- Data Collection and Analysis: AI can collect valuable data about bookings, preferences, and issues that can be used to improve service.

- Easy Integration: Platforms like Vapi.ai make integrating AI into existing systems surprisingly easy, and by connecting them to an automation platform such as make.com, it is possible to build very complex systems.

The Future of Voice Chat is Here

My experiment with creating an Estonian-language AI booking system on the Vapi.ai platform clearly shows that powerful and useful AI voice agents are no longer a distant fantasy. They are practical tools that can be implemented today in various fields – from customer service and reservations to education and healthcare.

Vapi.ai and similar platforms are democratizing AI development, allowing smaller companies and even individuals to create intelligent voice assistants that speak our language and solve our problems. The future where we speak to machines as naturally as we do to people is closer than we think, and it offers exciting opportunities for businesses and consumers alike. It’s time to start thinking about how you can use AI voice agents in your life or business!

What did I learn from this?

Building this Estonian-language AI voice agent on the Vapi.ai platform was more than just a technical experiment – it was an extremely educational journey. I learned firsthand how important details are and how quickly small oversights can lead to unexpected results. Here are two key lessons this project taught me:

Everything is based on a well-thought-out and detailed guide, or prompt. This is the brain and conscience of the AI. It became clear to me that AI does not “think” or “guess” anything; it follows exactly the instructions it is given. If the instructions are unclear or skip some steps, the AI will skip them too. For example, in an earlier experiment, the AI was so eager to book an appointment that it forgot to check Google Calendar to see whether the requested time was available. The result was a double booking, a situation every customer service representative wants to avoid. This confirmed that the prompt must be ironclad: every step, every rule, and every check must be clearly written down. You must tell the AI not only what it must do, but also what it must not do and how to behave in every situation.

Naturalness matters: AI must speak like a human. While AI can be technically great, it is crucial for the user experience that the interaction feels as human and free-flowing as possible. The AI tended to use stiff, robotic expressions that people rarely use in everyday speech, such as “Wait a second” or “It’ll only take a second.” I realized that the AI needs to be able to mimic the natural flow of a conversation to make the caller feel comfortable. Next time, I need to be more thorough in this area.

In conclusion, this experiment showed that creating a successful AI voice agent requires both technical acumen and a deep understanding of human interaction and process logic. A good prompt and natural language are the key factors that distinguish a system that merely works from a truly useful and enjoyable AI assistant. It is a continuous process of learning and improvement.

You can watch and listen to the AI voice booking process in the video below. The test calls were made through the web interface, but you can also connect a phone number through Twilio.

If you have any questions, feel free to contact us!